Tesla has recently made a monumental and controversial decision (as is characteristic of Tesla).

What happened?

In a newly released Support article entitled “Transitioning to Tesla Vision,” Tesla explained that, beginning with May 2021 deliveries, new Model 3 and Model Y cars sold in North America (US and Canada) will no longer be equipped with radar. The Model S and Model X are unaffected.

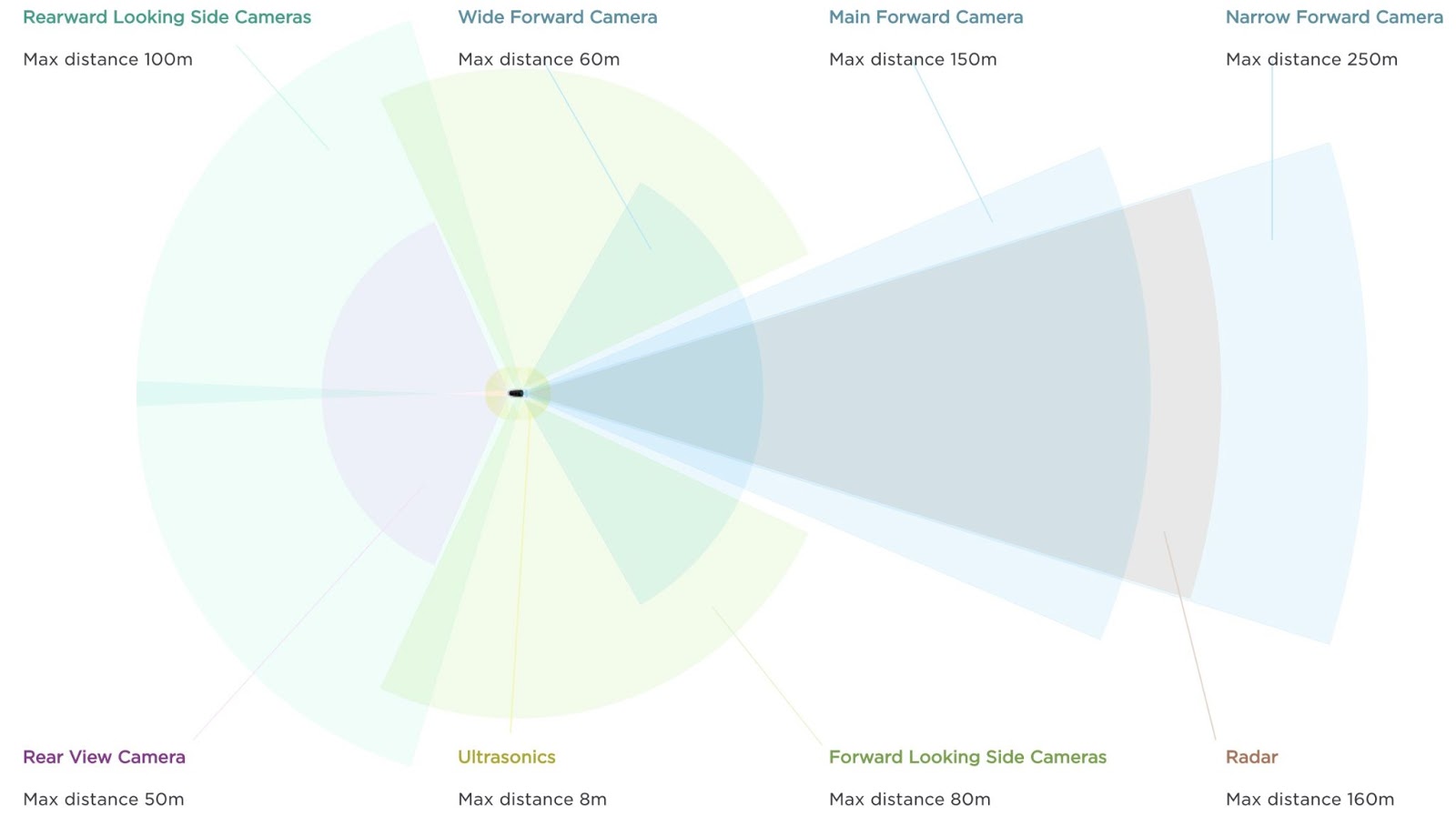

Existing Tesla cars have a front-facing radar to detect objects in front of the car, especially in bad weather conditions such as heavy rain, snow, and fog. The radar can detect objects up to 160 meters (525 feet) away.

These new Tesla cars without radar will have several features temporarily limited or disabled (Tesla says they will be restored through over-the-air updates):

- Autosteer will have a maximum speed of 75 mph

- Autosteer will have a longer minimum following distance (the following-distance setting normally ranges from 1 to 7; the minimum is now 3)

- Smart Summon and Emergency Lane Departure Avoidance are disabled

- High beams will be turned on automatically at nighttime (presumably to give the cameras better visibility)

- The new no-radar Tesla cars will be limited in situations with poor visibility. One owner driving in heavy rain received the message “Autopilot speed limited due to poor visibility” (see image below and the video from 23:35 to 25:03). As conditions worsened, Autopilot forced the driver to take over.

Tesla’s decision to remove radar has already been met with strong criticism, outrage, and concern over safety. But before we evaluate Tesla’s decision, we need to establish criteria (a big-picture grid) for evaluating it.

Evaluating Tesla’s Decision from First Principles: What Is Necessary to Achieve Autonomous Driving?

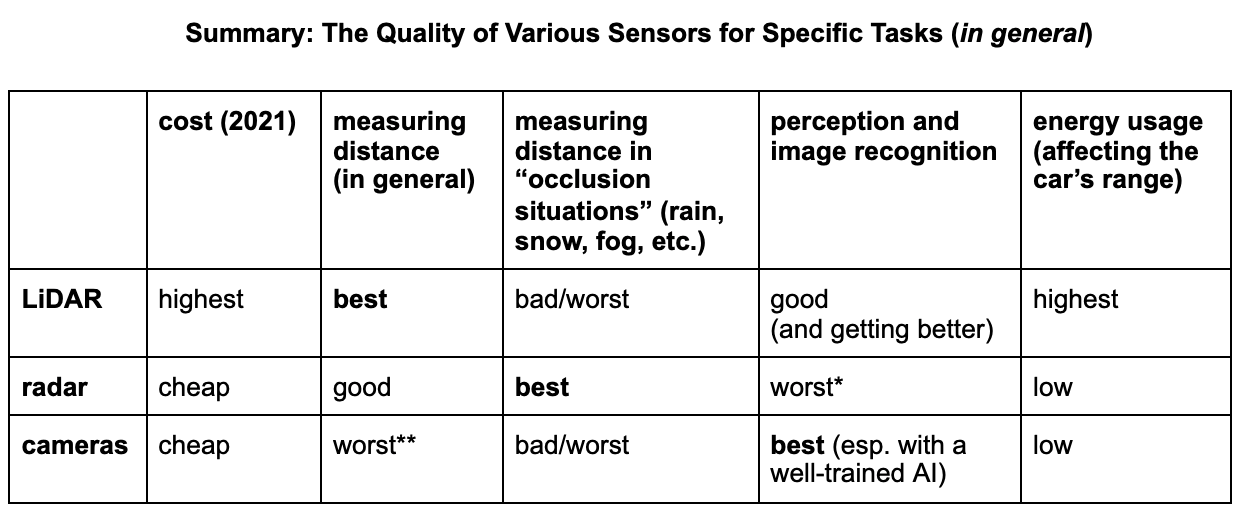

In a previous research article, we discussed Why LiDAR is doomed and unnecessary to achieve autonomous driving. In that article, we argued that different sensors (cameras, ultrasonics, LiDAR, radar) have different strengths and weaknesses, but that ultimately what is needed is both “eyes” AND “brains” for an autonomous car to drive safely in the real world.

Critics of Tesla have been focusing too much on hardware/sensors (the “eyes” of the car) rather than the more important issue of the car’s “brain,” or the process of teaching a computer how to drive in the real world.

The car must not only “see” the world around it, but also know what it is seeing (perception) and how to respond (artificial intelligence). Autonomous driving is fundamentally a software/artificial intelligence problem, NOT a hardware problem with sensors.

We can summarize the three steps to achieve autonomous driving:

(1) The car needs hardware/sensors for the purpose of . . . data gathering about surroundings.

- LiDAR (lasers operating at or near visible wavelengths, typically in the near-infrared)

- Radar (radio waves operating outside the visible light spectrum)

- Cameras

- Ultrasonics (for near-field object detection, 8 meters or less)

Every car needs hardware/sensors; the disagreement is over which ones, and how many of each, should be mounted on the car. Tesla is nearly alone in refusing to use LiDAR, and it is now eliminating radar as well (NOTE: George Hotz of Comma.AI also believes that LiDAR is unnecessary and unable to solve the problem of perception/vision).

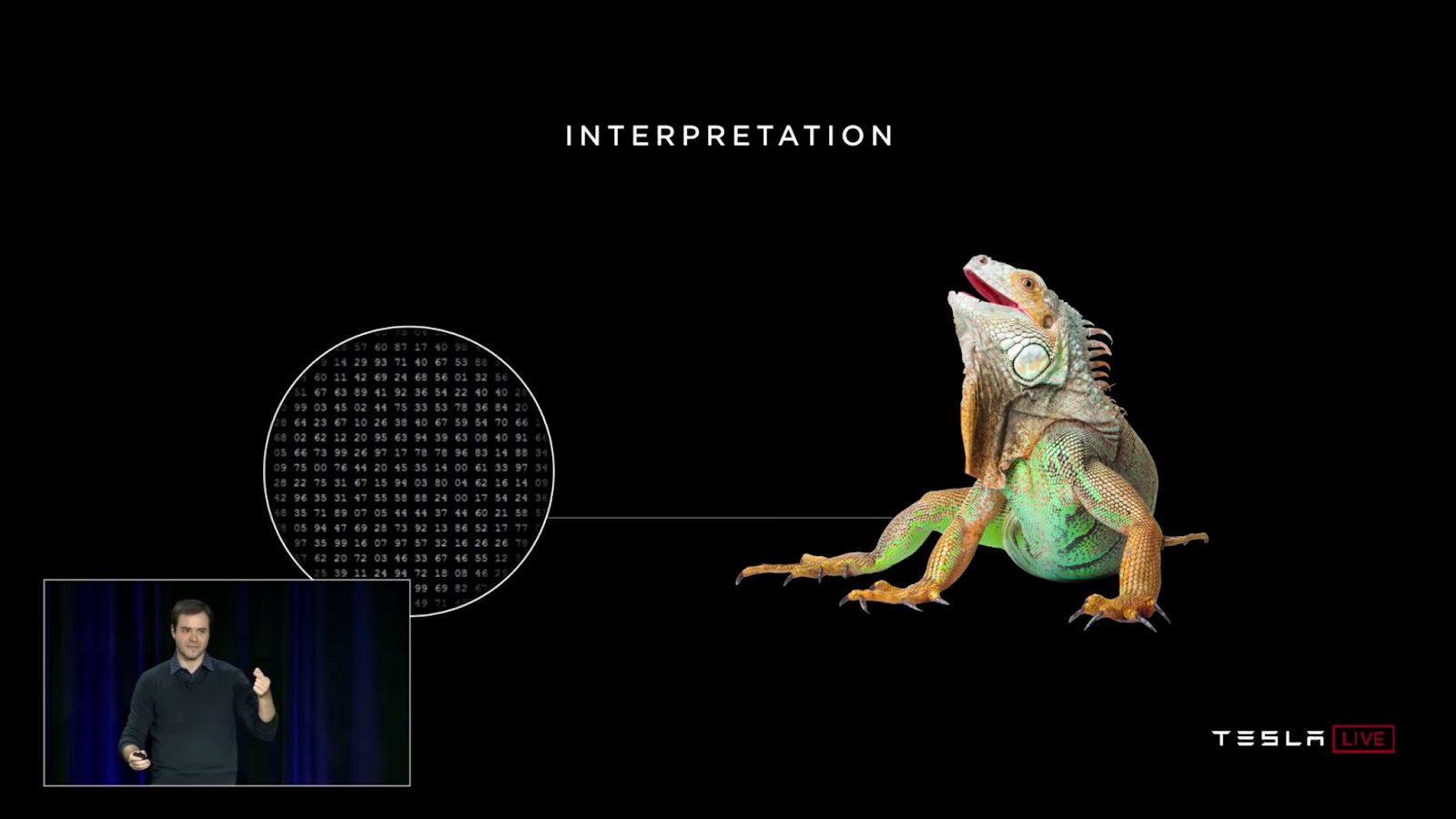

(2) Sensors exist for the purpose of . . . perception or interpretation (knowing what it sees).

Head of AI at Tesla, Andrej Karpathy, describes the problem of perception for computers:

“Images, to a computer, are really just a massive grid of pixels. And at each pixel, you have the brightness value of that point. So instead of seeing an image, a computer really gets a million numbers in a grid that tell you the brightness value at all the positions . . . So we have to go from that grid of pixels and brightness values into high-level concepts like iguana” (Andrej Karpathy, Tesla Autonomy Day, 1:54:43-1:55:06)
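To make Karpathy’s point concrete, here is a minimal Python sketch of what a (very small) grayscale image looks like to a computer. The pixel values are made up for illustration; a real camera frame is far larger and usually carries color channels as well.

```python
# A tiny 4x6 grayscale "image": each number is one pixel's brightness
# (0 = black, 255 = white). A megapixel camera frame holds about a million of these.
image = [
    [12,  15,  14,  13, 16, 11],
    [14, 200, 210, 205, 18, 12],
    [13, 198, 255, 202, 17, 14],
    [11,  16,  15,  14, 13, 12],
]

height = len(image)
width = len(image[0])
num_pixels = height * width

# The computer only "sees" these numbers. Any high-level concept
# (a bright blob, a headlight, an iguana) has to be inferred from them.
brightest = max(max(row) for row in image)
bright_pixels = [(r, c) for r in range(height) for c in range(width)
                 if image[r][c] > 128]

print(num_pixels)          # 24
print(brightest)           # 255
print(len(bright_pixels))  # 6
```

Even finding “a bright patch in the middle” requires code that scans the numbers; recognizing that the patch is a car’s brake light is the much harder perception problem.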

Data gathering through sensors is relatively easy; perception/interpretation is a software problem that is much more difficult and complex to solve.

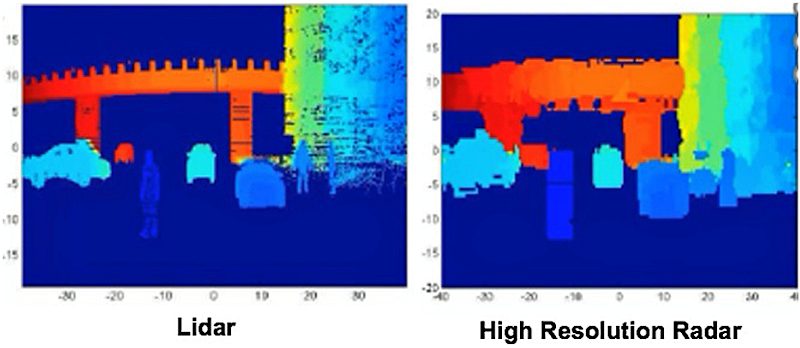

We must not confuse what we humans see in the output of various sensors with what computers “see.” For example, to human eyes, LiDAR output certainly looks better, but the more fundamental question is whether the driving computer understands what it is “seeing”:

Instead of endlessly debating what hardware/sensors an autonomous car needs, we need to focus on who is developing the best software/artificial intelligence for autonomous driving.

(3) But perception is still secondary to the car’s ability to respond properly to what it sees (artificial intelligence/Deep Learning/machine learning).

It is useless for an autonomous vehicle to have a perfect understanding of its surroundings and even know exactly what it is seeing, yet not have a well-developed brain to know how to react (artificial intelligence).


First Principles Takeaway: Within this three-fold grid (data gathering through sensors; perception/interpretation; proper response), Tesla’s decision to remove radar falls into the first category: Tesla has removed a sensor. Critics allege that this compromises safety.

But the more important issue is asking: How does a change in the first category (data gathering) affect the second and third categories (perception/interpretation and proper response)?

Why did Tesla remove radar?

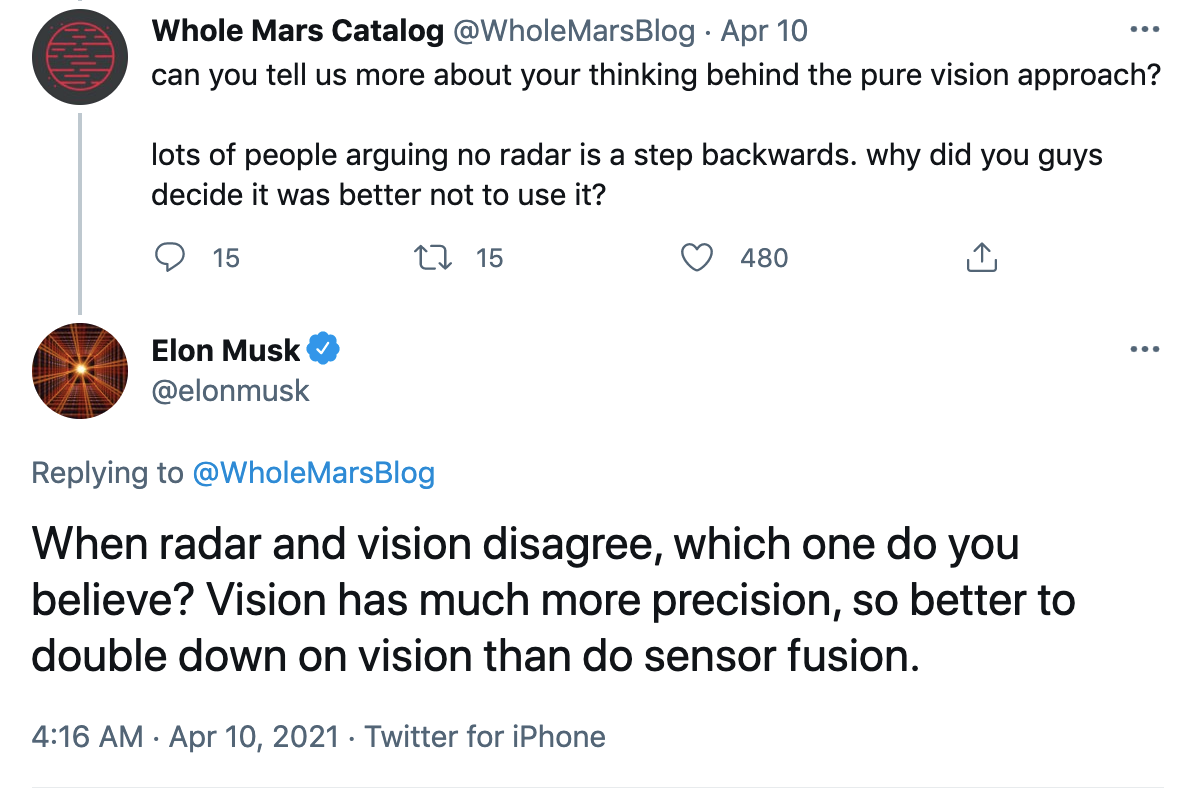

While everyone would appreciate a more detailed explanation from Tesla itself, Elon Musk has dropped hints about why Tesla removed radar in various tweets in April and May 2021 (see postscript below). From this information, we can piece together a three-part explanation:

1. Different sensors have different strengths and weaknesses. Having more sensors is not necessarily better because when sensors disagree, the computer still must prioritize one sensor over the others. Which sensor should be king?

2. While radar is especially good for “seeing” when vision is blocked, its weakness in perception/interpretation limits its overall usefulness.

3. Cameras can be taught to do what radar excels at: gauging the distance of surrounding objects.

(1) Different sensors have different strengths and weaknesses. Having more sensors is not necessarily better because when sensors disagree, the computer still must prioritize one sensor over the others. Which sensor should be king?

We have documented these strengths and weaknesses in our Why LiDAR is doomed article, but in general...

*Arbe Robotics is developing high-resolution 4D imaging radar that promises to improve radar’s perception compared with older radar technologies. However, the cost and quality of these new radars are unknown at this point.

**unless the cameras can be taught to measure distance via neural networks (as Tesla has done); in such a case, the cameras can be nearly as good as LiDAR at gauging distance.

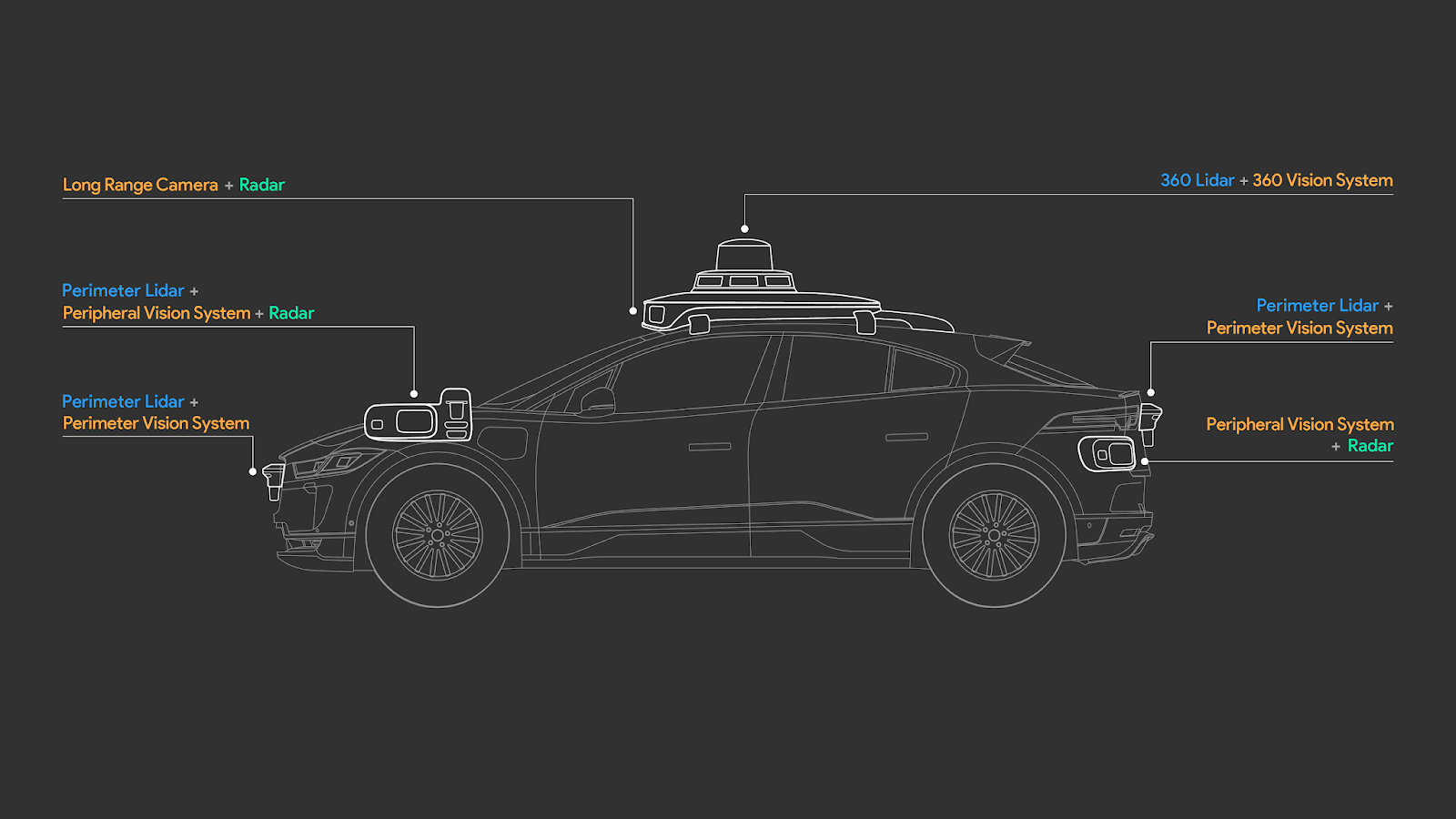

Because of these strengths and weaknesses, some autonomous driving companies decide to use as many sensors as possible. The fifth-generation Waymo car uses 5 LiDARs, 3 radars, and 6 cameras:

Waymo’s assumption seems to be that more is better. And indeed, this large and complex array of sensors does give the Waymo car a very detailed view of its surroundings. But gathering data about the surroundings is only the first step; the car must also interpret that data (perception) and know how to respond to it (artificial intelligence).

So what are the strengths/weaknesses of radar?

(2) While radar is especially good for “seeing” when vision is blocked, its weakness in perception/interpretation limits its overall usefulness.

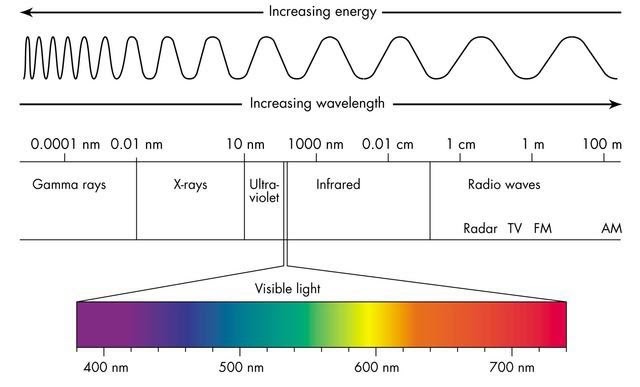

Radar has two main strengths. First, radar is excellent at detecting objects in “occlusion” situations, where vision is blocked: bad weather (rain, snow, fog), direct sunlight, low light, or dust and debris. In such conditions, cameras and lasers (LiDAR) are obstructed because they operate at or near visible wavelengths. In contrast, radar operates at much longer radio wavelengths, which pass through rain, fog, snow, and dust far more readily.

Tesla CEO Elon Musk has acknowledged the usefulness of radar on multiple occasions, most notably during Tesla Autonomy Day in 2019:

“[Tesla] does have a forward radar, which is low-cost and is helpful especially for occlusion situations, so if there’s fog or dust or snow, the radar can see through that. If you’re going to use active photon generation [LiDAR], don’t use visible wavelength [e.g., 400-700 nanometers], because with passive optical [cameras], you’ve taken care of all visible wavelengths. [Rather] you want to use a wavelength that is occlusion penetrating like radar . . . At 3.8 millimeters [with radar] vs. 400-700 nanometers [visible light], you’re gonna have much better occlusion penetration and that’s why we have a forward radar.” (Tesla Autonomy Day YouTube video, 2:34:08-2:34:39, lightly edited.)

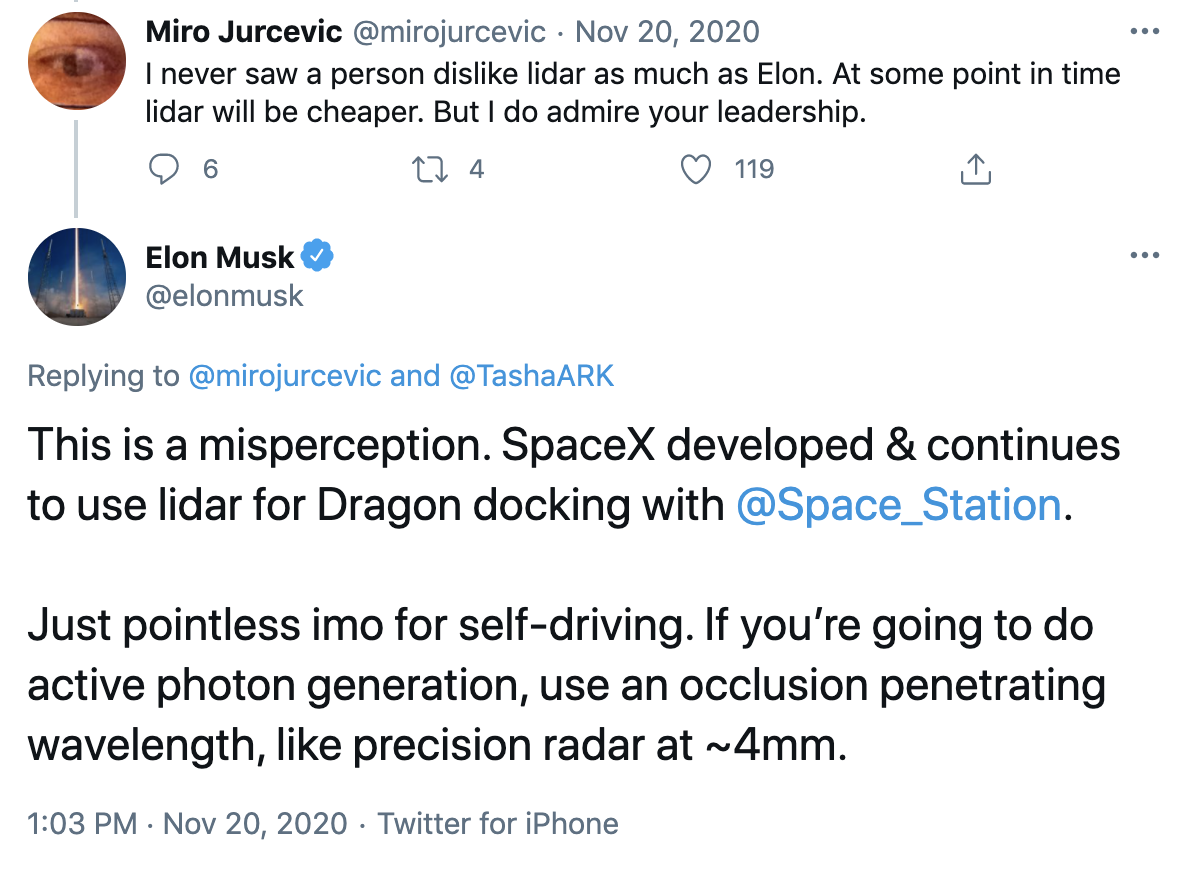

Also see Musk’s comments in the 2017 Q4 Earnings Call on February 7, 2018 and his comments in a November 20, 2020 tweet.

Radar’s second strength is that it can “see” two cars ahead. Its radio waves travel underneath the car directly in front, so radar can detect the distance and speed of the car “two cars ahead” and tell whether that car is stopping or stopped in a way that could cause an accident. Theoretically, this would help an autonomous car react more quickly than it could with camera vision or LiDAR alone.

However, compared to cameras and LiDAR, radar’s main weakness is that it is not very data-rich: radar tells us that “something is ahead,” but gives very little information about “what that something is” (perception/interpretation).

This point was captured well in a tweet thread:

In other words, radar as a sensor (first category of data gathering) is weak at helping the computer understand what it is seeing (second category of perception/interpretation).

Put differently: why would we make an inferior sensor our primary data gatherer?

(3) Cameras can be taught to do what radar excels at: gauging the distance of surrounding objects.

A major objection to using camera vision only is that, according to critics, cameras are bad at gauging the distance and velocity of surrounding objects, so radar and/or LiDAR is needed.

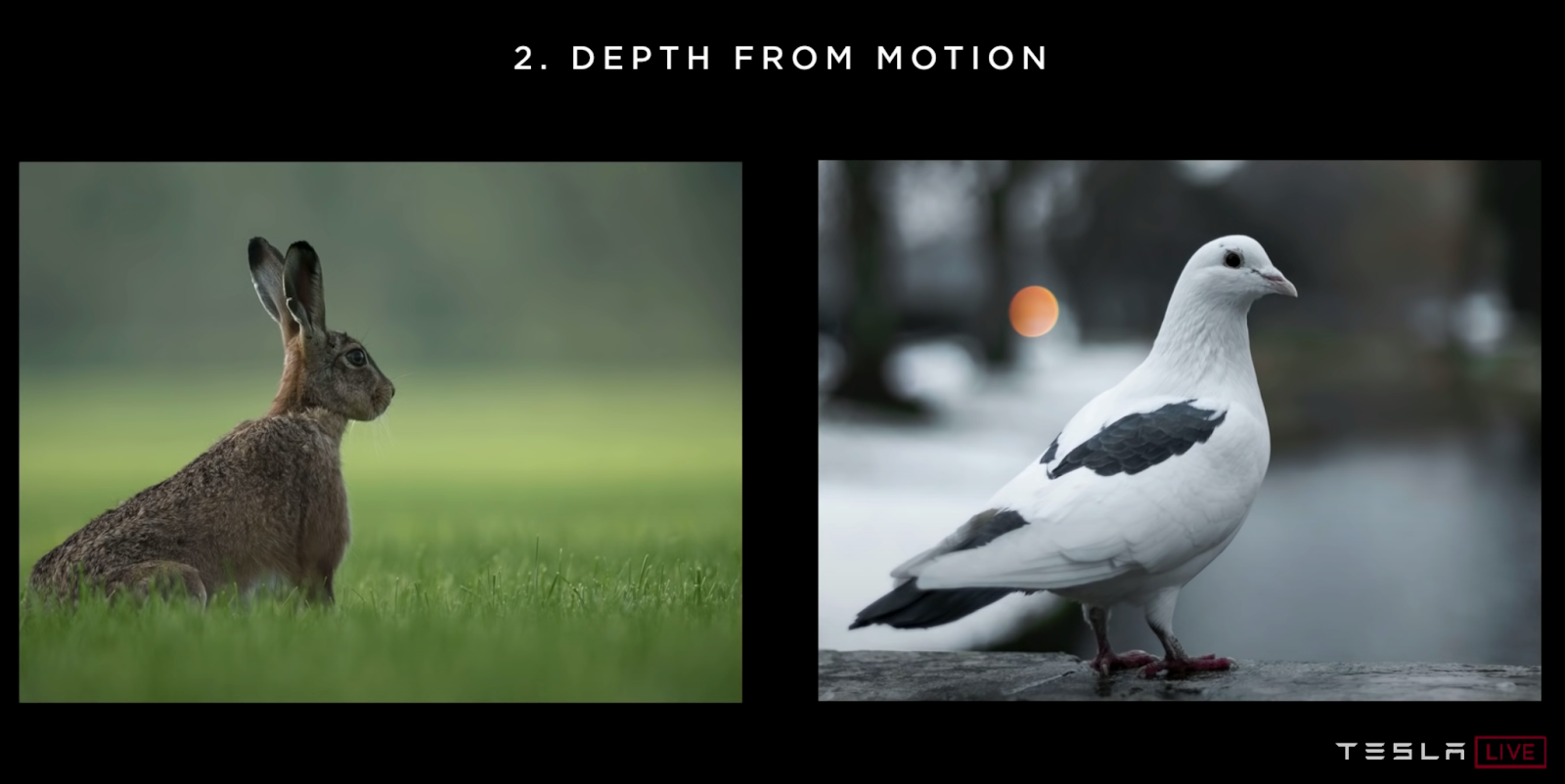

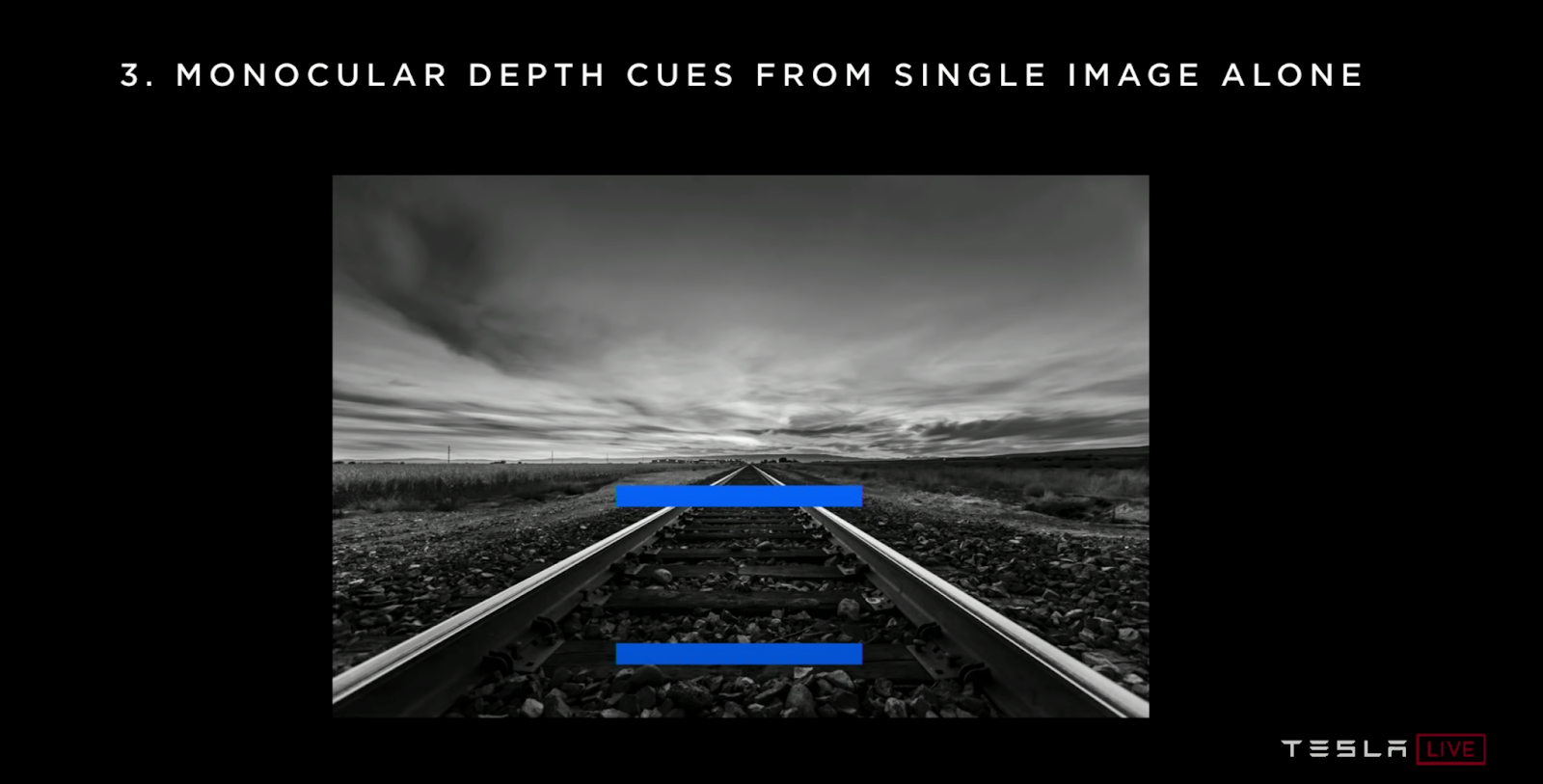

However, neural networks can be trained to estimate distance from camera images using numerous visual cues (as Tesla has done). Andrej Karpathy (during Tesla Autonomy Day, 2:16:45-2:22:19) explains how depth perception can be gained (1) from multiple views, like humans having two eyes; (2) from motion, like certain animals that bob their heads to see better; and (3) from depth cues such as vanishing points:

Using these techniques, cameras can be nearly as good as LiDAR and radar at gauging distance. Radar and LiDAR will always be more precise than cameras, but their additional precision matters little for autonomous driving.
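The “multiple views” cue can be illustrated with the textbook pinhole-stereo relation, depth = focal length × baseline / disparity, together with a rough closing speed computed from two successive depth estimates. This is a minimal sketch under stated assumptions: the focal length, baseline, and disparities are hypothetical numbers, not Tesla’s camera geometry, and it shows classic geometric stereo rather than the neural-network approach Karpathy describes.

```python
def stereo_depth_m(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth of a point seen by two horizontally offset, parallel cameras.

    Pinhole stereo relation: Z = f * B / d
      f: focal length in pixels
      B: baseline (distance between the two cameras) in meters
      d: disparity, the horizontal shift of the point between the two images, in pixels
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    return focal_px * baseline_m / disparity_px


def closing_speed_mps(depth_before_m: float, depth_after_m: float, dt_s: float) -> float:
    """Average closing speed between two depth estimates taken dt_s seconds apart.
    Positive means the object is getting closer."""
    return (depth_before_m - depth_after_m) / dt_s


# Hypothetical camera parameters, chosen only to keep the arithmetic easy.
FOCAL_PX = 1000.0
BASELINE_M = 0.3

z1 = stereo_depth_m(FOCAL_PX, BASELINE_M, disparity_px=10.0)  # 30.0 m away
z2 = stereo_depth_m(FOCAL_PX, BASELINE_M, disparity_px=12.0)  # 25.0 m away, 0.5 s later
speed = closing_speed_mps(z1, z2, dt_s=0.5)                   # closing at 10.0 m/s

# The same one-pixel disparity error costs far more depth accuracy at long range.
near_error = (stereo_depth_m(FOCAL_PX, BASELINE_M, 29.0)
              - stereo_depth_m(FOCAL_PX, BASELINE_M, 30.0))   # about 0.34 m
far_error = (stereo_depth_m(FOCAL_PX, BASELINE_M, 2.0)
             - stereo_depth_m(FOCAL_PX, BASELINE_M, 3.0))     # 150 m - 100 m = 50 m
```

The last two lines show why radar and LiDAR keep their precision edge at long range: disparity shrinks as distance grows, so a small measurement error in a camera image translates into a large distance error for far-away objects, while nearby objects are measured quite accurately.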

Conclusion #1: What does Tesla lose by removing radar? Is it safe to remove radar?

By removing radar, Tesla loses precise distance and velocity data in occlusion situations (bad weather, direct sunlight).

Many believe radar is crucial to safety. The question is: can Tesla still gather distance/velocity data from its remaining sensors (cameras) in occlusion situations? Or better: can Tesla still gather sufficient data to drive safely in occlusion situations?

A proper response would be as follows:

(1) When multiple sensors are in disagreement, it is probably best to go with the most data-rich sensor (cameras).

Cameras provide a richer, fuller view of a car’s surroundings beyond the distance and velocity data provided by radar. The car must know what it is looking at (perception), and not merely how far and how fast some unknown object is going. When an autonomous car receives data about its surroundings, the car needs to distinguish between objects directly in the car’s path vs. objects outside of the car’s possible driving space. Furthermore, not all objects in a car’s path are dangerous: a plastic bag flying in the wind does not require emergency braking. When humans drive, we have to filter out a lot of visual data that is irrelevant to driving. And cameras are best suited for this filtering task.

(2) An autonomous car can be taught to adapt to situations with blocked vision and drive more cautiously, like humans do in such situations.

In bad weather or direct sunlight, humans drive more slowly, and try to be fully alert to hazards on the road. Yet, even the best of human drivers become distracted or tired. And all humans have visual blind spots (we can only look in one direction at a time).

In contrast, the driving computer in a Tesla car (1) has no blind spots, since eight cameras give 360-degree coverage; (2) can maintain 100% alertness, since it does not get distracted or tired; and (3) can react more quickly than a human. These three advantages help maintain safety in an autonomous car.

(3) While an autonomous car with vision only will inevitably gather less comprehensive data than a car with radar, the car can still gather sufficient data to drive safely.

The problematic assumption of critics is that the car needs comprehensive data about its surroundings in order to drive safely. But humans do not drive with comprehensive data; we drive with a sufficient amount of data that allows us to accomplish the driving task safely.

In bad weather, cameras can still pick up obstacles that can be visually identified, albeit later than radar would. And when a hazard does show up on the cameras in such situations, a computer can react more quickly than a human.
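A simple stopping-distance calculation shows why both reaction time and speed matter: total distance is the distance covered while reacting plus the braking distance, d = v·t + v²/(2a). The reaction times and deceleration below are illustrative assumptions, not measured values for human drivers or for Tesla’s driving computer.

```python
MPH_TO_MPS = 0.44704  # exact conversion: 1 mile per hour = 0.44704 meters per second


def stopping_distance_m(speed_mph: float, reaction_time_s: float, decel_mps2: float) -> float:
    """Total stopping distance: d = v * t_react + v**2 / (2 * a).

    The first term is the distance covered before the brakes are applied;
    the second is the distance covered while braking at constant deceleration a.
    """
    v = speed_mph * MPH_TO_MPS
    reaction_distance = v * reaction_time_s
    braking_distance = v * v / (2.0 * decel_mps2)
    return reaction_distance + braking_distance


# Illustrative assumptions only (hypothetical values, not measurements):
HUMAN_REACTION_S = 1.5     # assumed human perception-reaction time
COMPUTER_REACTION_S = 0.5  # assumed driving-computer reaction time
WET_ROAD_DECEL = 5.0       # assumed braking deceleration on a wet road, m/s^2

human_50 = stopping_distance_m(50, HUMAN_REACTION_S, WET_ROAD_DECEL)
computer_50 = stopping_distance_m(50, COMPUTER_REACTION_S, WET_ROAD_DECEL)
human_40 = stopping_distance_m(40, HUMAN_REACTION_S, WET_ROAD_DECEL)

print(f"human, 50 mph:    {human_50:.1f} m")     # 83.5 m
print(f"computer, 50 mph: {computer_50:.1f} m")  # 61.1 m
print(f"human, 40 mph:    {human_40:.1f} m")     # 58.8 m
```

Under these assumptions, a faster reaction at the same speed saves about 22 m, and simply slowing from 50 to 40 mph saves even more, which is the same “react quickly and drive more cautiously” logic described above. Even a hazard detected somewhat later by the cameras leaves room to stop if the car has slowed down for the conditions.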

Conclusion #2: What does Tesla gain by removing radar?

By removing radar, Tesla gains a unified system for data gathering and perception that eliminates the problem of sensor disagreement and the challenge/complexity of sensor fusion.

This is the most important reason given by Musk himself:

We might wrongly assume that multiple sensors always agree, but that is not the case, and in such situations the computer must be taught what to do.

A well-documented situation where radar and cameras seem to disagree is when driving under a freeway/highway overpass. The following are from actual video captured by Tesla drivers who encountered phantom braking (i.e., braking for seemingly no good reason) while driving under an overpass:

In this situation, it is possible that radar treats the overpass itself as an obstacle and warns the car to brake quickly. But the cameras would recognize what they are seeing (perception) as an overpass, which is not an obstacle the car will crash into. Rather, there is road space underneath the overpass that the car can safely drive through. Both radar and cameras have gathered data, but the camera’s data is richer and more useful for the task of perception, which helps the car respond properly by continuing to drive instead of braking suddenly.
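The overpass case can be sketched as a toy decision rule in which camera perception decides whether an object matters at all, and distance/velocity data decide when to brake. Everything here is hypothetical: the class names, labels, and 2-second time-to-collision threshold are assumptions for illustration, the sketch assumes the radar return and the camera detection refer to the same object, and it is not how Tesla’s software actually works.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class RadarReturn:
    """What a conventional radar reports: distance and speed, but not WHAT the object is."""
    range_m: float
    closing_speed_mps: float  # positive = object getting closer


@dataclass
class CameraPerception:
    """What a vision system might report for the same object (hypothetical fields)."""
    label: str                    # e.g. "car", "overpass", "plastic_bag"
    in_driving_path: bool         # does it occupy the space the car will drive through?
    est_range_m: float            # vision-based distance estimate
    est_closing_speed_mps: float  # vision-based closing-speed estimate


# Hypothetical labels that never justify emergency braking.
NON_HAZARD_LABELS = {"overpass", "overhead_sign", "plastic_bag"}


def time_to_collision_s(range_m: float, closing_speed_mps: float) -> float:
    if closing_speed_mps <= 0:
        return float("inf")  # not closing in, so no collision on the current course
    return range_m / closing_speed_mps


def naive_radar_brake(radar: RadarReturn, ttc_threshold_s: float = 2.0) -> bool:
    """Brake on any close, fast-approaching radar return (prone to phantom braking)."""
    return time_to_collision_s(radar.range_m, radar.closing_speed_mps) < ttc_threshold_s


def should_brake(camera: CameraPerception, radar: Optional[RadarReturn] = None,
                 ttc_threshold_s: float = 2.0) -> bool:
    """Toy 'vision is king' rule: perception decides IF an object matters,
    distance/velocity decide WHEN to brake."""
    if not camera.in_driving_path or camera.label in NON_HAZARD_LABELS:
        return False  # camera overrides radar: an overpass is not an obstacle
    if radar is not None:
        ttc = time_to_collision_s(radar.range_m, radar.closing_speed_mps)
    else:
        ttc = time_to_collision_s(camera.est_range_m, camera.est_closing_speed_mps)
    return ttc < ttc_threshold_s


# Overpass: radar sees a strong return 40 m ahead, closing at 30 m/s.
overpass_radar = RadarReturn(range_m=40.0, closing_speed_mps=30.0)
overpass_cam = CameraPerception("overpass", False, 40.0, 30.0)

print(naive_radar_brake(overpass_radar))           # True (phantom braking)
print(should_brake(overpass_cam, overpass_radar))  # False

# Stopped car in the lane, vision only (no radar): 30 m ahead, closing at 20 m/s.
stopped_car_cam = CameraPerception("car", True, 30.0, 20.0)
print(should_brake(stopped_car_cam))               # True
```

The design choice mirrors the argument above: radar, when present, only refines timing, while the decision about whether something is a real obstacle rests with the data-rich sensor.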

Takeaway: Tesla is doubling down on its vision-only, data-centric approach

Tucked away at the bottom of its Transitioning to Tesla Vision article, Tesla explains why only the Model 3 and Model Y are having radar removed: “Model 3 and Model Y are our higher volume vehicles. Transitioning them to Tesla Vision first allows us to analyze a larger volume of real-world data in a short amount of time, which ultimately speeds up the roll-out of features based on Tesla Vision.”

Tesla delivered 182,847 Model 3/Y vehicles in Q1 2021, so we could estimate that perhaps about 61,000 Model 3/Y vehicles on the road today have no radar,* although this is only a rough figure: the delivery number is worldwide, while the radar removal applies only to North America, and Tesla continues to see growth in its delivery numbers. (*footnote: 182,847 divided by 3 months in a quarter = an average of 60,949 Model 3/Y cars sold in one month)

This suggests that Tesla wants to gather as much data as it can from radar-less cars in order to train its driving computer. Tesla can also compare decisions made in similar situations by cars with and without radar to see which perform better.
